
Human Brain Compresses Working Memories into Low-Res ‘Summaries’

Posted on by Lawrence Tabak, D.D.S., Ph.D.

You have probably done it already a few times today. Paused to remember a password, a shopping list, a phone number, or maybe the score to last night’s ballgame. The ability to store and recall needed information, called working memory, is essential for most of the human brain’s higher cognitive processes.

Researchers are still just beginning to piece together how working memory functions. But recently, NIH-funded researchers added an intriguing new piece to this neurobiological puzzle: how visual working memories are “formatted” and stored in the brain.

The findings, published in the journal Neuron, show that the visual cortex—the brain’s primary region for receiving, integrating, and processing visual information from the eye’s retina—acts more like a blackboard than a camera. That is, the visual cortex doesn’t photograph all the complex details of a visual image, such as the color of paper on which your password is written or the precise series of lines that make up the letters. Instead, it recodes visual information into something more like simple chalkboard sketches.

The discovery suggests that those pared-down, low-res representations serve as a kind of abstract summary, capturing the relevant information while discarding features that aren’t relevant to the task at hand. It also shows that different visual inputs, such as spatial orientation and motion, may be stored in virtually identical, shared memory formats.

The new study, from Clayton Curtis and Yuna Kwak, New York University, New York, builds upon a known fundamental aspect of working memory. Many years ago, it was determined that the human brain tends to recode visual information. For instance, if you are handed a 10-digit phone number on a card, the visual information gets recoded and stored in the brain as the sounds of the numbers being read aloud.

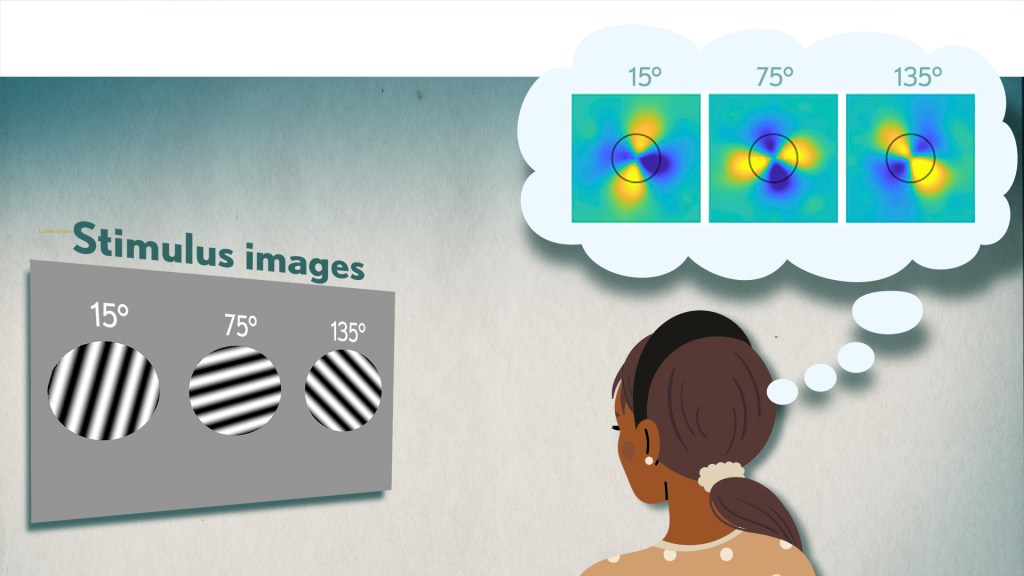

Curtis and Kwak wanted to learn more about how the brain formats representations of working memory in patterns of brain activity. To find out, they measured brain activity with functional magnetic resonance imaging (fMRI) while participants used their visual working memory.

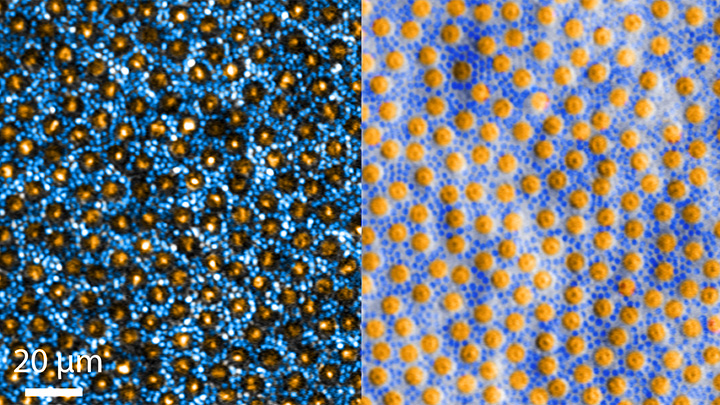

In each test, study participants were asked to remember a visual stimulus presented to them for 12 seconds and then make a memory-based judgment on what they’d just seen. In some trials, as shown in the image above, participants were shown a tilted grating, a series of black and white lines oriented at a particular angle. In others, they observed a cloud of dots, all moving in a direction to represent those same angles. After a short break, participants were asked to recall the angle of the grating’s tilt or the dot cloud’s motion and indicate it as accurately as possible.

It turned out that either visual stimulus—the grating or moving dots—resulted in the same patterns of neural activity in the visual cortex and parietal cortex. The parietal cortex is a part of the brain used in memory processing and storage.

These two distinct visual memories carrying the same relevant information seemed to have been recoded into a shared abstract memory format. As a result, the pattern of brain activity trained to recall motion direction was indistinguishable from that trained to recall the grating orientation.
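To make the idea of a shared format concrete, here is a minimal sketch in Python that runs on purely synthetic data. It assumes a simple nearest-centroid decoder and made-up voxel patterns; the function names, sizes, and noise level are illustrative choices, not details from the study. If both kinds of stimulus evoke the same angle-specific pattern, a decoder fit on grating trials should also read out the angle on motion trials.

```python
import numpy as np

def simulate_trials(templates, labels, noise_sd, rng):
    """Return one noisy voxel pattern per trial for the given angle labels."""
    noise = rng.normal(0.0, noise_sd, size=(len(labels), templates.shape[1]))
    return templates[labels] + noise

def fit_centroids(patterns, labels, n_classes):
    """Average the training patterns of each angle into one centroid."""
    return np.stack([patterns[labels == k].mean(axis=0) for k in range(n_classes)])

def predict(centroids, patterns):
    """Assign each trial to the angle whose centroid is nearest."""
    distances = np.linalg.norm(patterns[:, None, :] - centroids[None, :, :], axis=2)
    return distances.argmin(axis=1)

rng = np.random.default_rng(0)
n_angles, n_voxels, n_trials = 4, 50, 200

# Assumption: one shared pattern per remembered angle, whatever the stimulus.
shared_templates = rng.normal(0.0, 1.0, size=(n_angles, n_voxels))

grating_labels = rng.integers(0, n_angles, n_trials)
motion_labels = rng.integers(0, n_angles, n_trials)
grating_trials = simulate_trials(shared_templates, grating_labels, 1.0, rng)
motion_trials = simulate_trials(shared_templates, motion_labels, 1.0, rng)

# Train on gratings only, then test on moving dots ("cross-decoding").
centroids = fit_centroids(grating_trials, grating_labels, n_angles)
cross_accuracy = (predict(centroids, motion_trials) == motion_labels).mean()
print(f"cross-decoding accuracy: {cross_accuracy:.2f}")
```

In this toy setup the decoder transfers well only because the two stimulus types were built to share their patterns, which is the property the researchers observed in real brain activity.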

This result indicated that only the task-relevant features of the visual stimuli had been extracted and recoded into a shared memory format. But Curtis and Kwak wondered whether there might be more to this finding.

To take a closer look, they used a sophisticated model that allowed them to project the three-dimensional patterns of brain activity into a more-informative, two-dimensional representation of visual space. And, indeed, their analysis of the data revealed a line-like pattern, similar to a chalkboard sketch that’s oriented at the relevant angles.
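One generic way to picture such a projection is sketched below in Python. It is an illustration under stated assumptions, not the authors' actual model: each simulated voxel is assumed to respond to a small Gaussian patch of visual space (a hypothetical receptive field), and the map is the sum of those patches weighted by each voxel's activity. The voxel count, patch size, and 30-degree angle are all made up for the example.

```python
import numpy as np

def project_to_visual_space(activity, centers, rf_sigma, grid_x, grid_y):
    """Sum each voxel's Gaussian receptive field, weighted by its activity."""
    image = np.zeros_like(grid_x)
    for a, (cx, cy) in zip(activity, centers):
        rf = np.exp(-((grid_x - cx) ** 2 + (grid_y - cy) ** 2) / (2 * rf_sigma ** 2))
        image += a * rf
    return image

def dominant_orientation_deg(image, grid_x, grid_y):
    """Estimate the main axis of the map from its weighted second moments."""
    w = np.clip(image, 0.0, None)
    w = w / w.sum()
    mx, my = (w * grid_x).sum(), (w * grid_y).sum()
    cxx = (w * (grid_x - mx) ** 2).sum()
    cyy = (w * (grid_y - my) ** 2).sum()
    cxy = (w * (grid_x - mx) * (grid_y - my)).sum()
    return np.degrees(0.5 * np.arctan2(2 * cxy, cxx - cyy)) % 180.0

rng = np.random.default_rng(1)
n_voxels, true_angle_deg = 2000, 30.0

# Hypothetical receptive-field centers scattered over a disk of visual space.
radius = 10.0 * np.sqrt(rng.random(n_voxels))
phi = 2 * np.pi * rng.random(n_voxels)
centers = np.column_stack([radius * np.cos(phi), radius * np.sin(phi)])

# Assumption: voxels are most active when their patch lies on a line
# through the center of gaze at the remembered angle.
theta = np.radians(true_angle_deg)
dist_to_line = np.abs(-np.sin(theta) * centers[:, 0] + np.cos(theta) * centers[:, 1])
activity = np.exp(-dist_to_line ** 2 / (2 * 1.5 ** 2))

axis = np.linspace(-12.0, 12.0, 97)
grid_x, grid_y = np.meshgrid(axis, axis)
image = project_to_visual_space(activity, centers, 1.0, grid_x, grid_y)
estimated_angle_deg = dominant_orientation_deg(image, grid_x, grid_y)
print(f"estimated orientation: {estimated_angle_deg:.1f} degrees")
```

Plotting `image` would show a blurry stripe through the center of the map, tilted at roughly the simulated angle.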

The findings suggest that participants weren’t actually remembering the grating or a complex cloud of moving dots at all. Instead, they’d compressed the images into a line representing the angle that they’d been asked to remember.

Many questions remain about how remembering a simple angle, a relatively straightforward memory formation, will translate to the more-complex sets of information stored in our working memory. On a technical level, though, the findings show that working memory can now be accessed and captured in ways that hadn’t been possible before. This will help to delineate the commonalities in working memory formation and the possible differences, whether it’s remembering a password, a shopping list, or the score of your team’s big victory last night.

Reference:

[1] Unveiling the abstract format of mnemonic representations. Kwak Y, Curtis CE. Neuron. 2022, April 7; 110(1-7).

Links:

Working Memory (National Institute of Mental Health/NIH)

The Curtis Lab (New York University, New York)

NIH Support: National Eye Institute

Artificial Intelligence Getting Smarter! Innovations from the Vision Field

Posted on by Michael F. Chiang, M.D., National Eye Institute

One of many health risks premature infants face is retinopathy of prematurity (ROP), a leading cause of childhood blindness worldwide. ROP causes abnormal blood vessel growth in the light-sensing eye tissue called the retina. Left untreated, ROP can lead to scarring, retinal detachment, and blindness. It’s the disease that caused singer and songwriter Stevie Wonder to lose his vision.

Now, effective treatments are available—if the disease is diagnosed early and accurately. Advancements in neonatal care have led to the survival of extremely premature infants, who are at highest risk for severe ROP. Despite major advancements in diagnosis and treatment, tragically, about 600 infants in the U.S. still go blind each year from ROP. This disease is difficult to diagnose and manage, even for the most experienced ophthalmologists. And the challenges are much worse in remote corners of the world that have limited access to ophthalmic and neonatal care.

Artificial intelligence (AI) is helping bridge these gaps. Prior to my tenure as National Eye Institute (NEI) director, I helped develop a system called i-ROP Deep Learning (i-ROP DL), which automates the identification of ROP. In essence, we trained a computer to identify subtle abnormalities in retinal blood vessels from thousands of images of premature infant retinas. Strikingly, the i-ROP DL artificial intelligence system outperformed even international ROP experts [1]. This has enormous potential to improve the quality and delivery of eye care to premature infants worldwide.

Of course, the promise of medical artificial intelligence extends far beyond ROP. In 2018, the FDA approved the first autonomous AI-based diagnostic tool in any field of medicine [2]. Called IDx-DR, the system streamlines screening for diabetic retinopathy (DR), and its results require no interpretation by a doctor. DR occurs when blood vessels in the retina grow irregularly, bleed, and potentially cause blindness. About 34 million people in the U.S. have diabetes, and each is at risk for DR.

As with ROP, early diagnosis and intervention are crucial to preventing vision loss from DR. The American Diabetes Association recommends people with diabetes see an eye care provider annually to have their retinas examined for signs of DR. Yet fewer than 50 percent of Americans with diabetes receive these annual eye exams.

The IDx-DR system was conceived by Michael Abramoff, an ophthalmologist and AI expert at the University of Iowa, Iowa City. With NEI funding, Abramoff used deep learning to design a system for use in a primary-care medical setting. A technician with minimal ophthalmology training can use the IDx-DR system to scan a patient’s retinas and get results indicating whether a patient should be sent to an eye specialist for follow-up evaluation or to return for another scan in 12 months.

Many other methodological innovations in AI have occurred in ophthalmology. That’s because imaging is so crucial to disease diagnosis and clinical outcome data are so readily available. As a result, AI-based diagnostic systems are in development for many other eye diseases, including cataract, age-related macular degeneration (AMD), and glaucoma.

Rapid advances in AI are occurring in other medical fields, such as radiology, cardiology, and dermatology. But disease diagnosis is just one of many applications for AI. Neurobiologists are using AI to answer questions about retinal and brain circuitry, disease modeling, microsurgical devices, and drug discovery.

If it sounds too good to be true, it may be. There’s a lot of work that remains to be done. Significant challenges to AI utilization in science and medicine persist. For example, researchers from the University of Washington, Seattle, last year tested seven AI-based screening algorithms that were designed to detect DR. They found under real-world conditions that only one outperformed human screeners [3]. A key problem is that these AI algorithms need to be trained with more diverse images and data, including a wider range of races, ethnicities, and populations, as well as different types of cameras.
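Head-to-head comparisons like this one usually come down to how often a screener flags disease that is really there and how often it clears eyes that are really healthy. Here is a small Python sketch of those two standard measures, sensitivity and specificity. The choice of metrics and the toy labels are assumptions for illustration; they are not figures from the study.

```python
def sensitivity_specificity(truth, predicted):
    """Return (sensitivity, specificity) for binary labels, where 1 = disease."""
    tp = sum(1 for t, p in zip(truth, predicted) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(truth, predicted) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(truth, predicted) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(truth, predicted) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)

# Made-up reference diagnoses and two made-up screeners for ten eyes.
truth     = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
algorithm = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]
human     = [1, 1, 0, 0, 0, 0, 0, 0, 0, 1]

algo_sens, algo_spec = sensitivity_specificity(truth, algorithm)
human_sens, human_spec = sensitivity_specificity(truth, human)
print(f"algorithm: sensitivity={algo_sens:.2f}, specificity={algo_spec:.2f}")
print(f"human:     sensitivity={human_sens:.2f}, specificity={human_spec:.2f}")
```

In this toy case the algorithm catches more disease but also raises more false alarms, which is why a single accuracy number rarely settles whether a screener "outperforms" another.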

How do we address these gaps in knowledge? We’ll need larger datasets, a collaborative culture of sharing data and software libraries, broader validation studies, and algorithms to address health inequities and to avoid bias. The NIH Common Fund’s Bridge to Artificial Intelligence (Bridge2AI) project and NIH’s Artificial Intelligence/Machine Learning Consortium to Advance Health Equity and Researcher Diversity (AIM-AHEAD) Program will be major steps toward addressing those gaps.

So, yes—AI is getting smarter. But harnessing its full power will rely on scientists and clinicians getting smarter, too.

References:

[1] Automated diagnosis of plus disease in retinopathy of prematurity using deep convolutional neural networks. Brown JM, Campbell JP, Beers A, Chang K, Ostmo S, Chan RVP, Dy J, Erdogmus D, Ioannidis S, Kalpathy-Cramer J, Chiang MF; Imaging and Informatics in Retinopathy of Prematurity (i-ROP) Research Consortium. JAMA Ophthalmol. 2018 Jul 1;136(7):803-810.

[2] FDA permits marketing of artificial intelligence-based device to detect certain diabetes-related eye problems. Food and Drug Administration. April 11, 2018.

[3] Multicenter, head-to-head, real-world validation study of seven automated artificial intelligence diabetic retinopathy screening systems. Lee AY, Yanagihara RT, Lee CS, Blazes M, Jung HC, Chee YE, Gencarella MD, Gee H, Maa AY, Cockerham GC, Lynch M, Boyko EJ. Diabetes Care. 2021 May;44(5):1168-1175.

Links:

Retinopathy of Prematurity (National Eye Institute/NIH)

Diabetic Eye Disease (NEI)

Michael Abramoff (University of Iowa, Iowa City)

Bridge to Artificial Intelligence (Common Fund/NIH)

[Note: Acting NIH Director Lawrence Tabak has asked the heads of NIH’s institutes and centers to contribute occasional guest posts to the blog as a way to highlight some of the cool science that they support and conduct. This is the second in the series of NIH institute and center guest posts that will run until a new permanent NIH director is in place.]

Preventing Glaucoma Vision Loss with ‘Big Data’

Posted on by Dr. Francis Collins

Each morning, more than 2 million Americans start their rise-and-shine routine by remembering to take their eye drops. The drops treat their open-angle glaucoma, the most common form of the disease, caused by obstructed drainage of fluid where the eye’s cornea and iris meet. The slow drainage increases fluid pressure at the front of the eye. Meanwhile, at the back of the eye, fluid pushes on the optic nerve, causing its bundled fibers to fray and leading to gradual loss of side vision.

For many, the eye drops help to lower intraocular pressure and prevent vision loss. But for others, the drops aren’t sufficient and their intraocular pressure remains high. Such people will need next-level care, possibly including eye surgery, to reopen the clogged drainage ducts and slow this disease that disproportionately affects older adults and African Americans over age 40.

Credit: University of California San Diego

Sally Baxter, a physician-scientist with expertise in ophthalmology at the University of California, San Diego (UCSD), wants to learn how to predict who is at greatest risk for serious vision loss from open-angle and other forms of glaucoma. That way, they can receive more aggressive early care to protect their vision from this second-leading cause of blindness in the U.S.

To pursue this challenging research goal, Baxter has received a 2020 NIH Director’s Early Independence Award. Her research will build on the clinical observation that people with glaucoma frequently battle other chronic health problems, such as high blood pressure, diabetes, and heart disease. To learn more about how these and other chronic health conditions might influence glaucoma outcomes, Baxter has begun mining a rich source of data: electronic health records (EHRs).

In an earlier study of patients at UCSD, Baxter showed that EHR data helped to predict which people would need glaucoma surgery within the next six months [1]. The finding suggested that the EHR, especially information on a patient’s blood pressure and medications, could predict the risk for worsening glaucoma.

In her NIH-supported work, she’s already extended this earlier “Big Data” finding by analyzing data from more than 1,200 people with glaucoma who participate in NIH’s All of Us Research Program [2]. With consent from the participants, Baxter used their EHRs to train a computer to find telltale patterns within the data and then predict with 80 to 99 percent accuracy who would later require eye surgery.
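For readers curious what “training a computer” on records can look like, here is a minimal Python sketch using logistic regression on entirely synthetic data. The model choice, the two stand-in features (a standardized blood pressure reading and a medication count), and all of the numbers are assumptions for illustration; they are not the methods or data of the studies described here.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic(X, y, lr=0.1, n_iter=2000):
    """Fit logistic-regression weights (intercept first) by gradient descent."""
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = sigmoid(Xb @ w)
        w -= lr * Xb.T @ (p - y) / len(y)
    return w

def predict_proba(w, X):
    """Predicted probability of the outcome for each row of X."""
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])
    return sigmoid(Xb @ w)

rng = np.random.default_rng(42)
n_people = 1200

# Synthetic, standardized stand-ins for two record fields:
# column 0 = blood pressure, column 1 = number of medications.
X = rng.normal(size=(n_people, 2))
true_logit = 2.0 * X[:, 0] + 1.0 * X[:, 1] - 0.5   # made-up relationship
y = (rng.random(n_people) < sigmoid(true_logit)).astype(float)  # 1 = later surgery

# Fit on the first 900 simulated people, then check on the 300 held out.
train, test = slice(0, 900), slice(900, None)
w = fit_logistic(X[train], y[train])
accuracy = ((predict_proba(w, X[test]) >= 0.5) == y[test]).mean()
print(f"held-out accuracy: {accuracy:.2f}")
```

Holding back some people for testing matters because a model is only useful if it predicts outcomes for patients it has never seen.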

The findings confirm that machine learning approaches and EHR data can indeed help in managing people with glaucoma. That’s true even when the EHR data don’t contain any information specific to a person’s eye health.

In fact, the work of Baxter and other groups has pointed to an especially important role for blood pressure in shaping glaucoma outcomes. Hoping to explore this lead further with the support of her Early Independence Award, Baxter also will enroll patients in a study to test whether blood-pressure monitoring smart watches can add important predictive information on glaucoma progression. By combining round-the-clock blood pressure data with EHR data, she hopes to predict glaucoma progression with even greater precision. She’s also exploring innovative ways to track whether people with glaucoma use their eye drops as prescribed, which is another important predictor of the risk of irreversible vision loss [3].

Glaucoma research continues to make great progress. This progress ranges from basic research to the development of new treatments and high-resolution imaging technologies to improve diagnostics. But Baxter’s quest to develop practical clinical tools holds great promise, too, and hopefully will help one day to protect the vision of millions of people with glaucoma around the world.

References:

[1] Machine learning-based predictive modeling of surgical intervention in glaucoma using systemic data from electronic health records. Baxter SL, Marks C, Kuo TT, Ohno-Machado L, Weinreb RN. Am J Ophthalmol. 2019 Dec; 208:30-40.

[2] Predictive analytics for glaucoma using data from the All of Us Research Program. Baxter SL, Saseendrakumar BR, Paul P, Kim J, Bonomi L, Kuo TT, Loperena R, Ratsimbazafy F, Boerwinkle E, Cicek M, Clark CR, Cohn E, Gebo K, Mayo K, Mockrin S, Schully SD, Ramirez A, Ohno-Machado L; All of Us Research Program Investigators. Am J Ophthalmol. 2021 Jul;227:74-86.

[3] Smart electronic eyedrop bottle for unobtrusive monitoring of glaucoma medication adherence. Aguilar-Rivera M, Erudaitius DT, Wu VM, Tantiongloc JC, Kang DY, Coleman TP, Baxter SL, Weinreb RN. Sensors (Basel). 2020 Apr 30;20(9):2570.

Links:

Glaucoma (National Eye Institute/NIH)

All of Us Research Program (NIH)

Video: Sally Baxter (All of Us Research Program)

Sally Baxter (University of California San Diego)

Baxter Project Information (NIH RePORTER)

NIH Director’s Early Independence Award (Common Fund)

NIH Support: Common Fund

The Amazing Brain: Making Up for Lost Vision

Posted on by Dr. Francis Collins

Recently, I’ve highlighted just a few of the many amazing advances coming out of the NIH-led Brain Research through Advancing Innovative Neurotechnologies® (BRAIN) Initiative. And for our grand finale, I’d like to share a cool video that reveals how this revolutionary effort to map the human brain is opening up once-unimaginable possibilities for helping people with disabilities, such as vision loss.

This video, produced by Jordi Chanovas and narrated by Stephen Macknik, State University of New York Downstate Health Sciences University, Brooklyn, outlines a new strategy aimed at restoring central vision in people with age-related macular degeneration (AMD), a leading cause of vision loss among people age 50 and older. The researchers’ ultimate goal is to give such people the ability to see the faces of their loved ones or possibly even read again.

In the innovative approach you see here, neuroscientists aren’t even trying to repair the part of the eye destroyed by AMD: the light-sensitive retina. Instead, they are attempting to recreate the light-recording function of the retina within the brain itself.

How is that possible? Normally, the retina streams visual information continuously to the brain’s primary visual cortex, which receives the information and processes it into the vision that allows you to read these words. In folks with AMD-related vision loss, even though many cells in the center of the retina have stopped streaming, the primary visual cortex remains fully functional to receive and process visual information.

About five years ago, Macknik and his collaborator Susana Martinez-Conde, also at Downstate, wondered whether it might be possible to circumvent the eyes and stream an alternative source of visual information to the brain’s primary visual cortex, thereby restoring vision in people with AMD. They sketched out some possibilities and settled on an innovative system that they call OBServ.

Among the vital components of this experimental system are tiny, implantable neuro-prosthetic recording devices. Created in the Macknik and Martinez-Conde labs, each 1-centimeter device is powered by induction coils similar to those in the cochlear implants used to help people with profound hearing loss. The researchers propose to surgically implant two of these devices in the rear of the brain, where they will orchestrate the visual process.

For technical reasons, the restoration of central vision will likely be partial, with the window of vision spanning only about the size of one-third of an adult thumbnail held at arm’s length. But researchers think that would be enough central vision for people with AMD to regain some of their lost independence.

As demonstrated in this video from the BRAIN Initiative’s “Show Us Your Brain!” contest, here’s how researchers envision the system would ultimately work:

• A person with vision loss puts on a specially designed set of glasses. Each lens contains two cameras: one to record visual information in the person’s field of vision; the other to track that person’s eye movements enabled by residual peripheral vision.

• The eyeglass cameras wirelessly stream the visual information they have recorded to two neuro-prosthetic devices implanted in the rear of the brain.

• The neuro-prosthetic devices process and project this information onto a specific set of excitatory neurons in the brain’s hard-wired visual pathway. Researchers have previously used genetic engineering to turn these neurons into surrogate photoreceptor cells, which function much like those in the eye’s retina.

• The surrogate photoreceptor cells in the brain relay visual information to the primary visual cortex for processing.

• All the while, the neuro-prosthetic devices perform quality control of the visual signals, calibrating them to optimize their contrast and clarity.

While this might sound like the stuff of science fiction (and this actual application still lies several years in the future), the OBServ project is now actually conceivable thanks to decades of advances in the fields of neuroscience, vision, bioengineering, and bioinformatics research. All this hard work has made the primary visual cortex, with its switchboard-like wiring system, among the brain’s best-understood regions.

OBServ also has implications that extend far beyond vision loss. This project provides hope that once other parts of the brain are fully mapped, it may be possible to design equally innovative systems to help make life easier for people with other disabilities and conditions.

Links:

Age-Related Macular Degeneration (National Eye Institute/NIH)

Macknik Lab (SUNY Downstate Health Sciences University, Brooklyn)

Martinez-Conde Laboratory (SUNY Downstate Health Sciences University)

Show Us Your Brain! (BRAIN Initiative/NIH)

Brain Research through Advancing Innovative Neurotechnologies® (BRAIN) Initiative (NIH)

NIH Support: BRAIN Initiative

Taking Brain Imaging Even Deeper

Posted on by Dr. Francis Collins

Thanks to yet another amazing advance made possible by the NIH-led Brain Research through Advancing Innovative Neurotechnologies® (BRAIN) Initiative, I can now take you on a 3D fly-through of all six layers of the part of the mammalian brain that processes external signals into vision. This unprecedented view is made possible by three-photon microscopy, a low-energy imaging approach that is allowing researchers to peer deeply within the brains of living creatures without damaging or killing their brain cells.

The basic idea of multi-photon microscopy is this: for fluorescence microscopy to work, you want to deliver a specific energy level of photons (usually with a laser) to excite a fluorescent molecule, so that it will emit light at a slightly lower energy (longer wavelength) and be visualized as a burst of colored light in the microscope. That’s how fluorescence works. Green fluorescent protein (GFP) is one of many proteins that can be engineered into cells or mice to make that possible.

But for that version of the approach to work on tissue, the exciting photons need to penetrate deeply, and that’s not possible for such high-energy photons. So two-photon strategies were developed, in which two lower-energy photons must hit the target simultaneously, and their combined energy activates the fluorophore.

That approach has made a big difference, but for deep tissue penetration the photons are still too high in energy. Enter the three-photon version! Now the even lower energy of the photons makes tissue more optically transparent, though for activation of the fluorescent protein, three photons have to hit it simultaneously. But that’s part of the beauty of the system—the visual “noise” also goes down.
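The energy bookkeeping behind this can be checked with a few lines of Python. A photon’s energy is Planck’s constant times the speed of light divided by its wavelength, so a photon with two or three times the wavelength carries one-half or one-third of the energy. The 488-nanometer starting wavelength below is an assumed, illustrative one-photon excitation wavelength, and the picture is idealized: real fluorescent proteins do not absorb at exact multiples of it.

```python
PLANCK_EV_S = 4.135667696e-15     # Planck constant, in electronvolt-seconds
LIGHT_SPEED_M_S = 299_792_458.0   # speed of light, in meters per second

def photon_energy_ev(wavelength_nm):
    """Energy of a single photon, in electronvolts, from its wavelength."""
    return PLANCK_EV_S * LIGHT_SPEED_M_S / (wavelength_nm * 1e-9)

one_photon_nm = 488.0  # assumed one-photon excitation wavelength (illustrative)

for n_photons in (1, 2, 3):
    wavelength_nm = n_photons * one_photon_nm
    each_ev = photon_energy_ev(wavelength_nm)
    print(f"{n_photons} photon(s) at {wavelength_nm:.0f} nm: "
          f"{each_ev:.2f} eV each, {n_photons * each_ev:.2f} eV combined")
```

Each row delivers the same combined energy to the fluorescent molecule, but the individual photons get gentler and redder as the count goes up, which is what lets them travel deeper into tissue.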

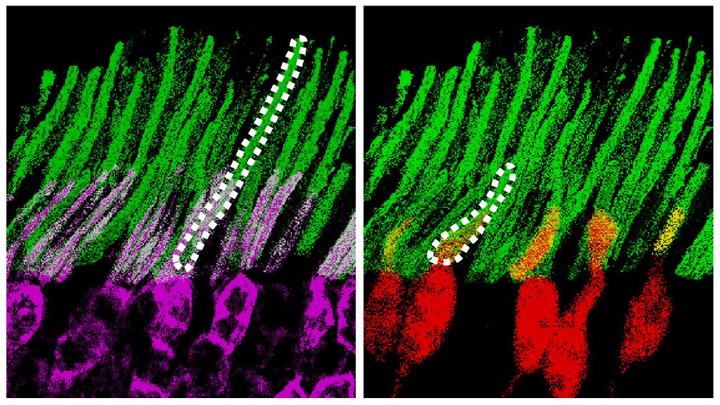

This particular video shows what takes place in the visual cortex of mice when objects pass before their eyes. As the objects appear, specific neurons (green) are activated to process the incoming information. Nearby, and slightly obscuring the view, are the blood vessels (pink, violet) that nourish the brain. At 33 seconds into the video, you can see the neurons’ myelin sheaths (pink) branching into the white matter of the brain’s subplate, which plays a key role in organizing the visual cortex during development.

This video comes from a recent paper in Nature Communications by a team from Massachusetts Institute of Technology, Cambridge [1]. To obtain this pioneering view of the brain, Mriganka Sur, Murat Yildirim, and their colleagues built an innovative microscope that emits three low-energy photons. After carefully optimizing the system, they were able to peer more than 1,000 microns (about 0.04 inches) deep into the visual cortex of a live, alert mouse, far surpassing the imaging capacity of standard one-photon microscopy (100 microns) and two-photon microscopy (400-500 microns).

This improved imaging depth allowed the team to plumb all six layers of the visual cortex (two-photon microscopy tops out at about three layers), as well as to record in real time the brain’s visual processing activities. Helping the researchers to achieve this feat was the availability of a genetically engineered mouse model in which the cells of the visual cortex are color labelled to distinguish blood vessels from neurons, and to show when neurons are active.

During their in-depth imaging experiments, the MIT researchers found that each of the visual cortex’s six layers exhibited different responses to incoming visual information. One of the team’s most fascinating discoveries is that neurons residing on the subplate are actually quite active in adult animals. It had been assumed that these subplate neurons were active only during development. Their role in mature animals is now an open question for further study.

Sur often likens the work in his neuroscience lab to astronomers and their perpetual quest to see further into the cosmos, but his goal is to see ever deeper into the brain. His group and many other researchers supported by the BRAIN Initiative are indeed proving themselves to be biological explorers of the first order.

Reference:

[1] Functional imaging of visual cortical layers and subplate in awake mice with optimized three-photon microscopy. Yildirim M, Sugihara H, So PTC, Sur M. Nat Commun. 2019 Jan 11;10(1):177.

Links:

Sur Lab (Massachusetts Institute of Technology, Cambridge)

The Brain Research through Advancing Innovative Neurotechnologies® (BRAIN) Initiative (NIH)

NIH Support: National Eye Institute; National Institute of Neurological Disorders and Stroke; National Institute of Biomedical Imaging and Bioengineering

‘Nanoantennae’ Make Infrared Vision Possible

Posted on by Dr. Francis Collins

Credit: Ma et al. Cell, 2019

Infrared vision often brings to mind night-vision goggles that allow soldiers to see in the dark, like you might have seen in the movie Zero Dark Thirty. But those bulky goggles may not be needed one day to scope out enemy territory or just the usual things that go bump in the night. In a dramatic advance that brings together materials science and the mammalian vision system, researchers have just shown that specialized lab-made nanoparticles applied to the retina, the thin tissue lining the back of the eye, can extend natural vision to see in infrared light.

The researchers showed in mouse studies that their specially crafted nanoparticles bind to the retina’s light-sensing cells, where they act like “nanoantennae” for the animals to see and recognize shapes in infrared—day or night—for at least 10 weeks. Even better, the mice maintained their normal vision the whole time and showed no adverse health effects. In fact, some of the mice are still alive and well in the lab, although their ability to see in infrared may have worn off.

When light enters the eyes of mice, humans, or any mammal, light-sensing cells in the retina absorb wavelengths within the range of visible light. (That’s roughly from 400 to 700 nanometers.) While visible light includes all the colors of the rainbow, it actually accounts for only a fraction of the full electromagnetic spectrum. Left out are the longer wavelengths of infrared light. That makes infrared light invisible to the naked eye.

In the study reported in the journal Cell, an international research team including Gang Han, University of Massachusetts Medical School, Worcester, wanted to find a way for mammalian light-sensing cells to absorb and respond to the longer wavelengths of infrared [1]. It turns out Han’s team had just the thing to do it.

His NIH-funded team was already working on the nanoparticles now under study for application in a field called optogenetics—the use of light to control living brain cells [2]. Optogenetics normally involves the stimulation of genetically modified brain cells with blue light. The trouble is that blue light doesn’t penetrate brain tissue well.

That’s where Han’s so-called upconversion nanoparticles (UCNPs) came in. They attempt to get around the normal limitations of optogenetic tools by incorporating certain rare earth metals. Those metals have a natural ability to absorb lower energy infrared light and convert it into higher energy visible light (hence the term upconversion).

But could those UCNPs also serve as miniature antennae in the eye, receiving infrared light and emitting readily detected visible light? To find out in mouse studies, the researchers injected a dilute solution containing UCNPs into the back of the eye. Such sub-retinal injections are used routinely by ophthalmologists to treat people with various eye problems.

These UCNPs were modified with a protein that allowed them to stick to light-sensing cells. Because of the way that UCNPs absorb and emit wavelengths of light energy, they should stick to the light-sensing cells and make otherwise invisible infrared light visible as green light.
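A quick energy check shows why upconversion has to pool more than one infrared photon. The Python sketch below assumes, purely for illustration, infrared light at 980 nanometers and green light at 535 nanometers; those wavelengths are example values chosen for the sketch, not numbers given in this post.

```python
import math

def min_photons_for_upconversion(absorbed_nm, emitted_nm):
    """Fewest absorbed photons whose combined energy covers one emitted photon."""
    # Photon energy is proportional to 1 / wavelength, so the energy ratio
    # E_emitted / E_absorbed equals absorbed_nm / emitted_nm.
    return math.ceil(absorbed_nm / emitted_nm)

infrared_nm = 980.0  # assumed infrared wavelength (illustrative)
green_nm = 535.0     # assumed green emission wavelength (illustrative)

photons_needed = min_photons_for_upconversion(infrared_nm, green_nm)
print(f"infrared photons needed per green photon: at least {photons_needed}")
```

Because one green photon carries nearly twice the energy of one of these infrared photons, a nanoparticle must absorb at least two of them to emit it.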

Their hunch proved correct, as mice treated with the UCNP solution began seeing in infrared! How could the researchers tell? First, they shined infrared light into the eyes of the mice. Their pupils constricted in response just as they would with visible light. Then the treated mice aced a series of maneuvers in the dark that their untreated counterparts couldn’t manage. The treated animals also could rely on infrared signals to make out shapes.

The research is not only fascinating, but its findings may also have a wide range of intriguing applications. One could imagine taking advantage of the technology for use in hiding encrypted messages in infrared or enabling people to acquire a temporary, built-in ability to see in complete darkness.

With some tweaks and continued research to confirm the safety of these nanoparticles, the system might also find use in medicine. For instance, the nanoparticles could potentially improve vision in those who can’t see certain colors. While such infrared vision technologies will take time to become more widely available, this research is a great example of how one area of science can cross-fertilize another.

References:

[1] Mammalian Near-Infrared Image Vision through Injectable and Self-Powered Retinal Nanoantennae. Ma Y, Bao J, Zhang Y, Li Z, Zhou X, Wan C, Huang L, Zhao Y, Han G, Xue T. Cell. 2019 Feb 27. [Epub ahead of print]

[2] Near-Infrared-Light Activatable Nanoparticles for Deep-Tissue-Penetrating Wireless Optogenetics. Yu N, Huang L, Zhou Y, Xue T, Chen Z, Han G. Adv Healthc Mater. 2019 Jan 11:e1801132.

Links:

Diagram of the Eye (National Eye Institute/NIH)

Infrared Waves (NASA)

Visible Light (NASA)

Han Lab (University of Massachusetts, Worcester)

NIH Support: National Institute of Mental Health; National Institute of General Medical Sciences

Studying Color Vision in a Dish

Posted on by Dr. Francis Collins

Credit: Eldred et al., Science

Researchers can now grow miniature versions of the human retina—the light-sensitive tissue at the back of the eye—right in a lab dish. While most “retina-in-a-dish” research is focused on finding cures for potentially blinding diseases, these organoids are also providing new insights into color vision.

Our ability to view the world in all of its rich and varied colors starts with the retina’s light-absorbing cone cells. In this image of a retinal organoid, you see cone cells (blue and green). Those labeled with blue produce a visual pigment that allows us to see the color blue, while those labeled green make visual pigments that let us see green or red. The cells labeled with red are the highly sensitive rod cells, which aren’t involved in color vision, but are very important for detecting motion and seeing at night.

A Ray of Molecular Beauty from Cryo-EM

Posted on by Dr. Francis Collins

Credit: Subramaniam Lab, National Cancer Institute, NIH

Walk into a dark room, and it takes a moment to make out the objects, from the wallet on the table to the sleeping dog on the floor. But after a few seconds, our eyes are able to adjust and see in the near-dark, thanks to a protein called rhodopsin found at the surface of certain specialized cells in the retina, the thin, vision-initiating tissue that lines the back of the eye.

This illustration shows light-activating rhodopsin (orange). The light photons cause the activated form of rhodopsin to bind to its protein partner, transducin, made up of three subunits (green, yellow, and purple). The binding amplifies the visual signal, which then streams onward through the optic nerve for further processing in the brain—and the ability to avoid tripping over the dog.
