Study Suggests During Sleep, Neural Process Helps Clear the Brain of Damaging Waste

Posted by Dr. Monica M. Bertagnolli

We’ve long known that sleep is a restorative process necessary for good health. Research has also shown that the accumulation of waste products in the brain is a leading cause of numerous neurological disorders, including Alzheimer’s and Parkinson’s diseases. What hasn’t been clear is how the healthy brain “self-cleans,” or flushes out that detrimental waste.

But a new study by a research team supported in part by NIH suggests that a neural process that happens while we sleep helps cleanse the brain, leading us to wake up feeling rested and restored. Better understanding this process could one day lead to methods that help people function well on less sleep. It could also help researchers find potential ways to delay or prevent neurological diseases related to accumulated waste products in the brain.

The findings, reported in Nature, show that, during sleep, neural networks in the brain act like an array of miniature pumps, producing large, rhythmic waves through synchronous bursts of activity that propel fluids through brain tissue. Much like washing dishes, where you use a rhythmic motion of varying speed and intensity to clear off debris, this process clears accumulated metabolic waste products out of the brain during sleep.

The research team, led by Jonathan Kipnis and Li-Feng Jiang-Xie at Washington University School of Medicine in St. Louis, wanted to better understand how the brain manages its waste. This is not an easy task, given that the human brain’s billions of neurons inevitably produce plenty of junk during the cognitive processes that allow us to think, feel, move, and solve problems. Those waste products also build up in a complex environment: a densely packed maze of interconnected neurons, blood vessels, and interstitial spaces, surrounded by a protective blood-brain barrier that limits the movement of substances in or out.

So, how does the brain move fluid through those tight spaces with the force required to get waste out? Earlier research suggested that neural activity during sleep might play an important role in those waste-clearing dynamics. But previous studies hadn’t pinned down the way this works.

To learn more in the new study, the researchers recorded brain activity in mice. They also used an ultrathin silicon probe to measure fluid dynamics in the brain’s interstitial spaces. In awake mice, they saw irregular neural activity and only minor fluctuations in the interstitial spaces. But when the animals were resting under anesthesia, the researchers saw a big change. Brain recordings showed strongly enhanced neural activity, with two distinct but tightly coupled rhythms. The research team realized that these structured wave patterns could generate enough energy to move small molecules and peptides, including waste products, through the tight spaces within brain tissue.

To make sure that the fluid dynamics were really driven by neurons, the researchers used tools that allowed them to turn neural activity off in some areas. Those experiments showed that, when neurons stopped firing, the waves also stopped. They went on to show similar dynamics during natural sleep in the animals and confirmed that disrupting these neuron-driven fluid dynamics impaired the brain’s ability to clear out waste.

These findings highlight the importance of this cleansing process during sleep for brain health. The researchers now want to better understand how specific patterns and variations in those brain waves lead to changes in fluid movement and waste clearance. This could eventually help researchers find ways to speed up the removal of damaging waste, potentially preventing or delaying certain neurological diseases and perhaps reducing how much sleep people need.

Reference:

[1] Jiang-Xie LF, et al. Neuronal dynamics direct cerebrospinal fluid perfusion and brain clearance. Nature. DOI: 10.1038/s41586-024-07108-6 (2024).

NIH Support: National Center for Complementary and Integrative Health

Healing Switch Links Acute Kidney Injury to Fibrosis, Suggesting Way to Protect Kidney Function

Posted by Dr. Monica M. Bertagnolli

Healthy kidneys—part of the urinary tract—remove waste and help balance chemicals and fluids in the body. However, our kidneys have a limited ability to regenerate healthy tissue after sustaining injuries from conditions such as diabetes or high blood pressure. Injured kidneys are often left with a mix of healthy and scarred tissue, or fibrosis, which over time can compromise their function and lead to chronic kidney disease or complete kidney failure. More than one in seven adults in the U.S. are estimated to have chronic kidney disease, according to the Centers for Disease Control and Prevention, most without knowing it.

Now, a team of researchers led by Sanjeev Kumar at Cedars-Sinai Medical Center, Los Angeles, has identified a key molecular “switch” that determines whether injured kidney tissue will heal or develop those damaging scars.[1] Their findings, reported in the journal Science, could lead to new and less invasive ways to detect fibrosis in the kidneys. The research could also point toward a targeted therapeutic approach that might prevent or reverse scarring to protect kidney function.

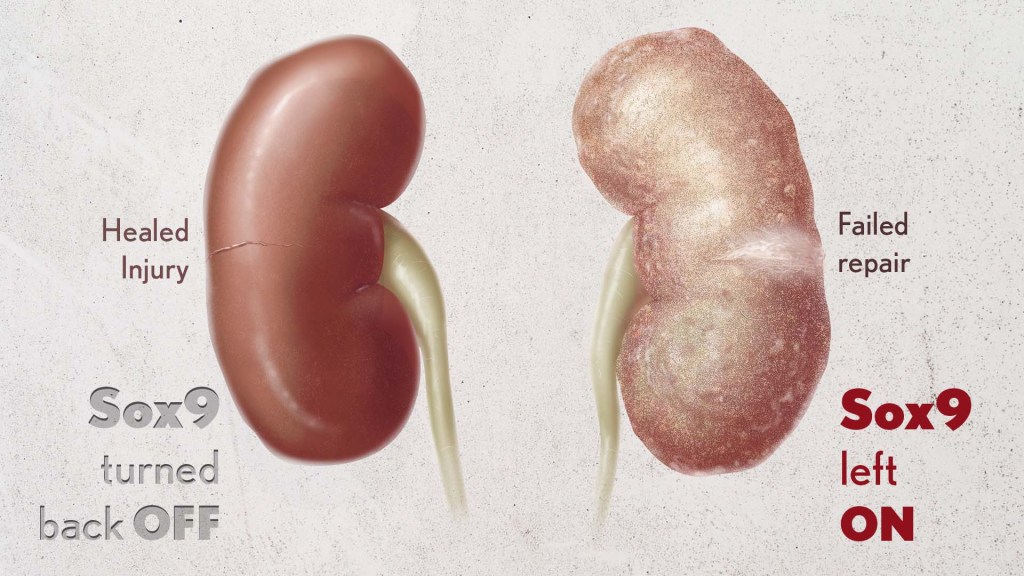

In earlier studies, the research team found that a protein called Sox9 plays an important role in switching on the repair response in kidneys after acute injury.[2] In some cases, the researchers noticed that Sox9 remained active for a prolonged period of a month or more. They suspected this might be a sign of unresolved injury and repair.

By conducting studies in animal models of kidney damage, the researchers found that cells that turned Sox9 on and then back off healed without fibrosis. However, cells that failed to regenerate into healthy kidney cells kept Sox9 switched on indefinitely, which in turn led to fibrosis.

According to Kumar, Sox9 appears to act like a sensor, switching on after injury. Once the injured cells are restored to health, Sox9 switches back off. When healing doesn’t proceed optimally, Sox9 stays on, leading to scarring. Importantly, the researchers also found they could encourage kidneys to recover by forcing Sox9 to turn off a week after an injury, suggesting it may be a promising drug target.

The researchers also looked for evidence of this process in patients who had received kidney transplants. They found that Sox9 was switched on when transplanted kidneys took longer to start working. Patients whose kidneys continued to produce Sox9 also had lower kidney function and more scarring than those whose kidneys didn’t.

The findings suggest that the dynamics observed in animal studies may be clinically relevant in people, and that treatments targeting Sox9 might help kidneys heal instead of scar. The researchers say they hope that similar studies in the future will lead to greater understanding of healing and fibrosis in other organs—including the heart, lungs, and liver—with potentially important clinical implications.

References:

[1] Aggarwal S, et al. SOX9 switch links regeneration to fibrosis at the single-cell level in mammalian kidneys. Science. DOI: 10.1126/science.add6371 (2024).

[2] Kumar S, et al. Sox9 Activation Highlights a Cellular Pathway of Renal Repair in the Acutely Injured Mammalian Kidney. Cell Reports. DOI: 10.1016/j.celrep.2015.07.034 (2015).

NIH Support: National Institute of Diabetes and Digestive and Kidney Diseases

Aided by AI, Study Uncovers Hidden Sex Differences in Dynamic Brain Function

Posted by Dr. Monica M. Bertagnolli

We’re living in an especially promising time for biomedical discovery and advances in the delivery of data-driven health care for everyone. A key part of this is the tremendous progress made in applying artificial intelligence to study human health and ultimately improve clinical care in many important and sometimes surprising ways.[1] One new example of this comes from a fascinating study, supported in part by NIH, that uses AI approaches to reveal meaningful sex differences in the way the brain works.

As reported in the Proceedings of the National Academy of Sciences, researchers led by Vinod Menon at Stanford Medicine, Stanford, CA, have built an AI model that can—nine times out of ten—tell whether the brain in question belongs to a female or male based on scans of brain activity alone.[2] These findings not only help resolve long-standing debates about whether reliable differences between the sexes exist in the human brain, but they’re also a step toward improving our understanding of why some psychiatric and neurological disorders affect women and men differently.

The prevalence of certain psychiatric and neurological disorders can vary significantly between men and women, leading researchers to suspect that sex differences in brain function likely exist. For example, studies have found that females are more likely to experience depression, anxiety, and eating disorders, while autism, Attention-Deficit/Hyperactivity Disorder, and schizophrenia are seen more often in males. But earlier research on sex differences in the brain has focused mainly on anatomical and structural studies of brain regions and their connections. Much less is known about how those structural differences translate into differences in brain activity and function.

To help fill those gaps in the new study, Menon’s team took advantage of vast quantities of brain activity data from MRI scans from the NIH-supported Human Connectome Project. The data was captured from hundreds of healthy young adults with the goal of studying the brain and how it changes with growth, aging, and disease. To use this data to explore sex differences in brain function, the researchers developed what’s known as a deep neural network model in which a computer “learned” how to recognize patterns in brain activity data that could distinguish a male from a female brain.

This approach doesn’t rely on any preconceived notions about which features might be important. A computer is simply shown many examples of brain activity from males and females and, over time, can begin to pick up on otherwise hidden differences that are useful for classifying them accurately. One thing that set this work apart from earlier attempts was that it relied on dynamic scans of brain activity, which capture the interplay among brain regions.

After analyzing about 1,500 brain scans, the model could usually (although not always) tell whether a scan came from a male or female brain. The model also worked reliably across different datasets, including brain scans from people in different places in the U.S. and Europe. Overall, the findings confirm that reliable sex differences in brain activity do exist.

Where did the model find those differences? To get an idea, the researchers turned to an approach called explainable AI, which allowed them to dig deeper into the specific features and brain areas their model was using to pick up on sex differences. It turned out that one set of areas the model relied on to distinguish between male and female brains is what’s known as the default mode network. This network is involved in processing self-referential information and constructing a coherent sense of self, and it is especially active when people let their minds wander.[3] Other important areas included the striatum and limbic network, which are involved in learning and how we respond to rewards, respectively.

Many questions remain, including whether such differences arise primarily due to inherent biological differences between the sexes or what role societal circumstances play. But the researchers say that the discovery already shows that sex differences in brain organization and function may play important and overlooked roles in mental health and neuropsychiatric disorders. Their AI model can now also be applied to begin to explain other kinds of brain differences, including those that may affect learning or social behavior. It’s an exciting example of AI-driven progress and good news for understanding variations in human brain functions and their implications for our health.

References:

[1] Bertagnolli MM. Advancing health through artificial intelligence/machine learning: The critical importance of multidisciplinary collaboration. PNAS Nexus. DOI: 10.1093/pnasnexus/pgad356 (2023).

[2] Ryali S, et al. Deep learning models reveal replicable, generalizable, and behaviorally relevant sex differences in human functional brain organization. Proc Natl Acad Sci. DOI: 10.1073/pnas.2310012121 (2024).

[3] Menon V. 20 years of the default mode network: A review and synthesis. Neuron. DOI: 10.1016/j.neuron.2023.04.023 (2023).

NIH Support: National Institute of Mental Health, National Institute of Biomedical Imaging and Bioengineering, Eunice Kennedy Shriver National Institute of Child Health and Human Development, National Institute on Aging

Celebrating New Clinical Center Exhibit for Nobel Laureate Dr. Harvey Alter

Posted by Dr. Monica M. Bertagnolli

Earlier this month, I had the great honor of attending the opening of an exhibit at the NIH Clinical Center commemorating the distinguished career of Dr. Harvey Alter. Harvey’s collaborators, colleagues, and family members joined him to celebrate this display, which was developed by the Office of NIH History and Stetten Museum and is dedicated to his groundbreaking hepatitis C work.

As I remarked at the event, we at NIH are proud to be able to claim Harvey as our own. He has spent almost the entirety of his professional career at the Clinical Center, working as a scientist in the Department of Transfusion Medicine since the 1960s.

Those who view this permanent exhibit will learn about how Harvey’s dedicated research has transformed the safety of the U.S. blood supply. Before the 1970s, nearly a third of patients who received multiple, lifesaving blood transfusions contracted hepatitis. Today, the risk of contracting hepatitis from a blood transfusion is essentially zero, thanks largely to Harvey’s research advances, including his work to identify the hepatitis C virus, which earned him the 2020 Nobel Prize in Physiology or Medicine. A cure for hepatitis C became available in 2014, and former NIH Director Dr. Francis Collins, who was at the event, has been working with President Biden to ensure greater access to these medications as part of an effort to eliminate hepatitis C in this country. This important work would not have been possible without Harvey’s foundational discoveries. Harvey is one of six Nobelists who did the entirety of their award-winning research at NIH as federal scientists, and the only NIH Nobel laureate to be recognized for clinical research.

This exhibit in the busy halls of the Clinical Center is a good reminder to the many who pass by of why we do what we do: It can take long hours and many years, but we can make a significant impact in clinical care when we try to understand the root causes of problems. Please stop by when you’re there to learn more about Harvey’s remarkable career.

A Potential New Way to Prevent Noise-Induced Hearing Loss: Trapping Excess Zinc

Posted by Dr. Monica M. Bertagnolli

Hearing loss is a pervasive problem, affecting one in eight people aged 12 and up in the U.S.[1] While hearing loss has multiple causes, an important one for millions of people is exposure to loud noises, which can cause hearing to deteriorate gradually or be lost all at once. The only methods used to prevent noise-induced hearing loss today are avoiding loud noises altogether or wearing earplugs or other protective devices during loud activities. But findings from an intriguing new NIH-supported study exploring the underlying causes of this form of hearing loss suggest it may be possible to protect hearing in a different way: with treatments targeting excess and damaging levels of zinc in the inner ear.

The new findings, reported in the Proceedings of the National Academy of Sciences, come from a team led by Thanos Tzounopoulos, Amantha Thathiah, and Chris Cunningham at the University of Pittsburgh.[2] The research team is focused on understanding how hearing works, as well as developing ways to treat hearing loss and tinnitus (the perception of sound, like ringing or buzzing, that doesn’t have an external source), both of which can arise from loud noises.

Previous studies have shown that traumatic noises of varying durations and intensities can lead to different types of damage to cells in the cochlea, the fluid-filled cavity in the inner ear that plays an essential role in hearing. For instance, in mouse studies, noise equivalent to a blasting rock concert caused the loss of tiny sound-detecting hair cells and essential supporting cells in the cochlea, leading to hearing loss. Milder noises comparable to the sound of a hand drill can lead to subtler hearing loss, as essential connections, or synapses, between hair cells and sensory neurons are lost.

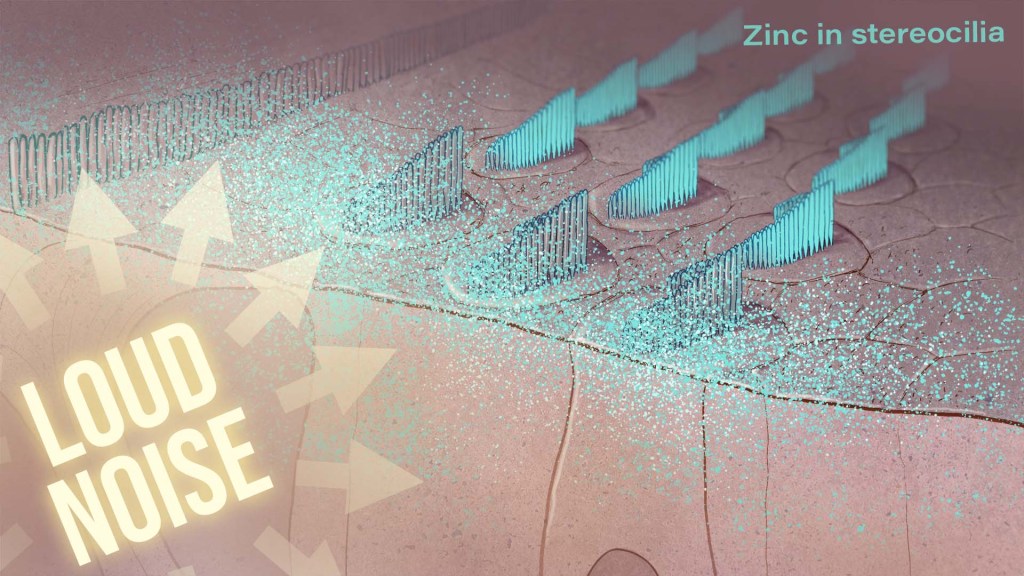

To better understand why this happens, the research team wanted to investigate the underlying cellular- and molecular-level events and signals responsible for inner ear damage and irreversible hearing loss caused by loud sounds. They looked to zinc, an essential mineral in our diets that plays many important roles in the body. Interestingly, zinc concentrations in the inner ear are the highest of any organ or tissue in the body. But despite this, the role of zinc in the cochlea and its effects on hearing and hearing loss hadn’t been studied in detail.

Most zinc in the body—about 90%—is bound to proteins. But the researchers were interested in the approximately 10% of zinc that’s free-floating, due to its important role in signaling in the brain and other parts of the nervous system. They wanted to find out what happens to the high concentrations of zinc in the mouse cochlea after traumatic levels of noise, and whether targeting zinc might influence inner ear damage associated with hearing loss.

The researchers found that, hours after mice were exposed to loud noise, zinc levels in the inner ear spiked and became dysregulated in the hair cells and in key parts of the cochlea, with significant shifts in where zinc was located inside cells. Those changes in zinc were associated with cellular damage and disrupted communication between sensory cells in the inner ear.

The good news is that this discovery suggested a possible solution: inner ear damage and hearing loss might be averted by targeting excess zinc. Their subsequent findings suggest that this approach works. Mice treated with a slow-releasing compound in the inner ear were protected from noise-induced damage and associated hearing loss. The compound is what’s known as a zinc chelating agent, which binds and traps excess free zinc, thus limiting cochlear damage and hearing loss.

Will this strategy work in people? We don’t know yet. However, the researchers report that they’re planning to pursue preclinical safety studies of the new treatment approach. Their hope is to one day make a zinc-targeted treatment readily available to protect against noise-induced hearing loss. But, for now, the best way to protect your hearing while working with noisy power tools or attending a rock concert is to remember your ear protection.

References:

[1] Quick Statistics About Hearing. National Institute on Deafness and Other Communication Disorders.

[2] Bizup B, et al. Cochlear zinc signaling dysregulation is associated with noise-induced hearing loss, and zinc chelation enhances cochlear recovery. PNAS. DOI: 10.1073/pnas.2310561121 (2024).

NIH Support: National Institute on Deafness and Other Communication Disorders, National Institute on Aging, National Institute of Biomedical Imaging and Bioengineering

Study Offers New Clues to Why Most People with Autoimmune Diseases Are Women

Posted by Dr. Monica M. Bertagnolli

As many as 50 million Americans have one of more than 100 known autoimmune diseases, making autoimmune disease the third most prevalent disease category, surpassed only by cancer and heart disease.[1,2] This category of disease has also long held a mystery: Why are most people with a chronic autoimmune condition—as many as four out of every five—women? This sex-biased trend includes autoimmune diseases such as rheumatoid arthritis, multiple sclerosis, scleroderma, lupus, Sjögren’s syndrome, and many others.

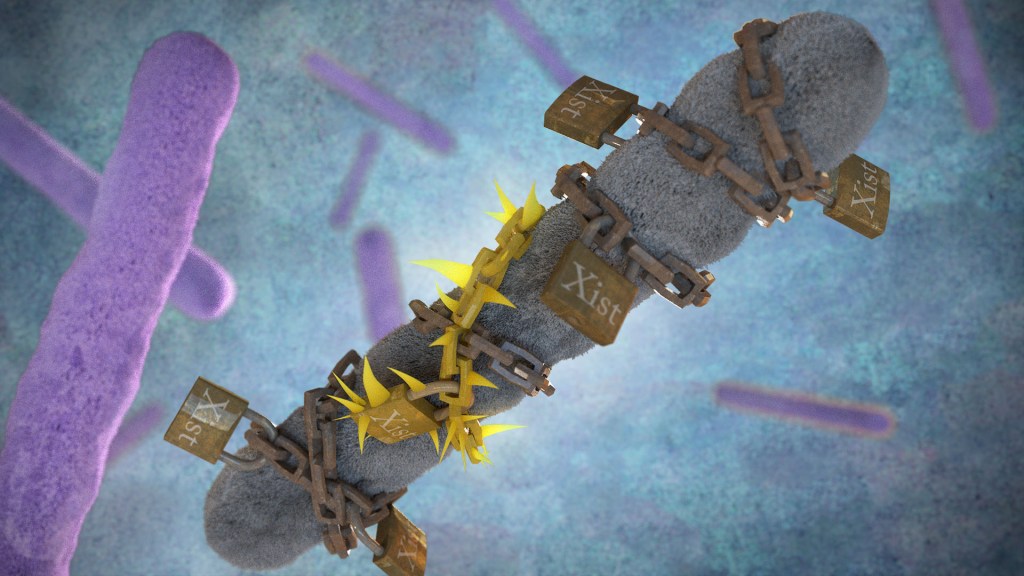

Now, exciting findings from a study supported in part by NIH provide a clue to why this may be the case, with potentially important implications for the early detection, treatment, and prevention of autoimmune diseases. The new evidence, reported in the journal Cell, suggests that more women develop autoimmune diseases than men due in part to the most fundamental difference between the biological sexes: females have two X chromosomes, while males have an X and a Y. More specifically, it has to do with molecules called Xist (pronounced “exist”), long stretches of non-coding RNA that are transcribed from the X chromosome only when a cell has two X chromosomes.

Those long Xist molecules wind themselves around sections of just one of a female’s two X chromosomes, shutting down the extra X chromosome in a process known as X-chromosome inactivation. It’s an essential process to ensure those cells won’t produce too many proteins encoded on X chromosomes, which would be a deadly mistake. It’s also something that males, with a single X chromosome and a much smaller Y chromosome carrying relatively few working genes, don’t have to worry about.

The new findings come from a team at Stanford University School of Medicine, Stanford, CA, led by Howard Chang and Diana Dou. What they suggest is that while Xist molecules play an essential role in X-chromosome inactivation, they also have a more nefarious ability to encourage the formation of odd clumps of RNA, DNA, and proteins that can in turn trigger strong autoimmune responses.

In earlier research, the team identified about 80 different proteins that bind to Xist either directly or indirectly. After taking a close look at the list, the researchers realized that many of the proteins had been shown to play some role in autoimmune conditions. This raised an intriguing question: Could the reason women develop autoimmune diseases so much more often than men be explained by those Xist-containing clumps?

To test the idea, the researchers first turned to male mice, engineering males from two different strains to produce Xist to see whether it would increase their risk for autoimmunity in ways they could measure. In the strain that was genetically prone to autoimmunity, it did: once Xist was activated, the male mice became more susceptible to developing a lupus-like condition. It didn’t happen in every individual, which suggests, not surprisingly, that the development of autoimmune disease requires additional triggers as well.

In addition, the researchers found that in a different mouse strain that was resistant to developing autoimmunity, adding Xist in males wasn’t enough to cause autoimmunity. That also makes sense: while women are much more prone to developing autoimmune disease, most women don’t develop one. Xist complexes likely lead to autoimmunity only when certain genetic and other conditions are met.

The researchers also examined blood samples from 100 people with autoimmune conditions and found they had antibodies to many of their own Xist complexes. Some of those antibodies also appeared specific to a certain autoimmune disorder, suggesting that they might be useful for tests that could detect autoimmunity or particular autoimmune conditions even before symptoms arise.

There are still many questions to explore in future research, including why men sometimes do get autoimmune conditions, and what other key triggers drive the development of autoimmunity. But this fundamentally important discovery points to potentially new ways to think about the causes for the autoimmune conditions that affect so many people in communities here and around the world.

References:

[1] The American Autoimmune Related Diseases Association. Autoimmune Facts.

[2] Dou DR, et al. Xist ribonucleoproteins promote female sex-biased autoimmunity. Cell. DOI: 10.1016/j.cell.2023.12.037 (2024).

NIH Support: National Institute of Arthritis and Musculoskeletal and Skin Diseases

Understanding Childbirth Through a Single-Cell Atlas of the Placenta

Posted by Dr. Monica M. Bertagnolli

While every birth story is unique, many parents would agree that going into labor is an unpredictable process. Although most pregnancies last about 40 weeks, about one in every 10 infants in the U.S. is born before the 37th week of pregnancy, when their brain, lungs, and liver are still developing.[1] Some pregnancies also end in an unplanned emergency caesarean delivery after labor fails to progress, for reasons that are largely unknown. Gaining a better understanding of what happens during healthy labor at term may help to elucidate why labor doesn’t proceed normally in some cases.

In a recent development, NIH scientists and their colleagues reported some fascinating new findings that could one day give health care providers the tools to better understand and perhaps even predict labor.[2] The research team produced an atlas showing the patterns of gene activity that take place in various cell types during labor. To create the atlas, they examined tissues from the placentas of 18 patients not in labor who underwent caesarean delivery and 24 patients in labor. The researchers also analyzed blood samples from another cohort of more than 250 people who delivered at various timepoints. This remarkable study, published in Science Translational Medicine, is the first to analyze gene activity at the single-cell level to better understand the communication that occurs between maternal and fetal cells and tissues during labor.

The placenta is an essential organ for bringing nutrients and oxygen to a growing fetus. It also removes waste, provides immune protection, and supports fetal development. The placenta participates in both normal labor at term and preterm labor, and problems with the placenta can lead to many issues, including preterm birth. To create the placental atlas, the study team used an approach called single-cell RNA sequencing. Messenger RNA molecules transcribed, or copied, from DNA serve as templates for proteins, including those that send important signals between tissues. By sequencing RNAs at the single-cell level, it’s possible to examine gene activity and signaling patterns in many thousands of individual cells at once. This method allows scientists to capture and describe in detail the activities within individual cell types along with interactions among cells of different types and in immune or other key signaling pathways.

Using this approach, the researchers found that cells in the chorioamniotic membranes, which surround the fetus and rupture as part of the labor and delivery process, showed the greatest changes. They also found cells in the mother and fetus that were especially active in generating inflammatory signals. They note that these findings are consistent with previous research showing that inflammation plays an important role in sustaining labor.

Gene activity patterns and changes in the placenta can only be studied after the placenta is delivered. However, it would be ideal if these changes could be identified in the bloodstream of mothers earlier in pregnancy, before labor, so that health care providers can intervene if necessary. The recent study showed that this was possible: Certain gene activity patterns observed in placental cells during labor could be detected in blood samples taken earlier in pregnancy from women who would later go on to have a preterm birth. The authors note that more research is needed to validate these findings before they can be used as a clinical tool.

Overall, these findings offer important insight into the underlying biology that normally facilitates healthy labor and delivery. They also offer preliminary proof-of-concept evidence that placental biomarkers present in the bloodstream during pregnancy may help to identify pregnancies at increased risk for preterm birth. While much more work and larger studies are needed, these findings suggest that it may one day be possible to identify those at risk for a difficult or untimely labor, when there is still opportunity to intervene.

The research was conducted by the Pregnancy Research Branch, part of the Eunice Kennedy Shriver National Institute of Child Health and Human Development (NICHD), and led by Roberto Romero, M.D., D.Med.Sci., NICHD; Nardhy Gomez-Lopez, Ph.D., Washington University School of Medicine in St. Louis; and Roger Pique-Regi, Ph.D., Wayne State University, Detroit.

References:

[1] Preterm Birth. CDC.

[2] Garcia-Flores V, et al. Deciphering maternal-fetal crosstalk in the human placenta during parturition using single-cell RNA sequencing. Science Translational Medicine. DOI: 10.1126/scitranslmed.adh8335 (2024).

NIH Support: Eunice Kennedy Shriver National Institute of Child Health and Human Development

What’s Behind that Morning Migraine? Community-Based Study Points to Differences in Perceived Sleep Quality, Energy on the Previous Day

Posted by Dr. Monica M. Bertagnolli

Headaches are the most common form of pain and a major reason people miss work or school. Recurrent attacks of migraine headaches can be especially debilitating, involving moderate to severe throbbing and pulsating pain on one side of the head that sometimes lasts for days. Migraines and severe headaches affect about 1 in 5 women and about 1 in 10 men, making them one of the most prevalent of all neurological disorders.[1] And yet there’s still a lot we don’t know about what causes headaches or how to predict when one is about to strike.

Now a new NIH-led study reported in the journal Neurology offers some important insight.[2] One of the things I especially appreciate about this new work is that it was conducted in a community setting rather than through a specialty clinic, with people tracking their own headache symptoms, sleep, mood, and more on a mobile phone app while they went about their daily lives. This means the findings are highly relevant to the average migraine sufferer who shows up in a primary care doctor’s office looking for help for their recurrent headaches.

The study, led by Kathleen Merikangas at NIH’s National Institute of Mental Health, Bethesda, MD, is part of a larger, community-based Family Study of Affective and Anxiety Spectrum Disorders. This ongoing study enrolls volunteers from the greater Washington, D.C., area with a range of disorders, including bipolar disorders, major depression, anxiety disorders, sleep disorders, and migraine, along with their immediate family members. It also includes people with none of these disorders who serve as a control group. The goal is to learn more about the frequency of mood and other mental and physical disorders in families and how often they co-occur. This information can provide insight into the nature and causes of all these conditions.

While there will be much more to come from this ambitious work, the primary aim of this latest study was to look for links between a person’s perceived mood, sleep, energy, and stress and their likelihood of developing a headache. The study’s 477 participants, aged 7 to 84, included people with and without migraines who were also assessed for mood, anxiety, and sleep disorders and other physical conditions. Women accounted for 291 of the study’s participants. Each participant was asked to rate their anxiousness, mood, energy, and stress, and to record any headaches, four times each day for two weeks. Each morning, they also reported on their sleep the night before.

The data showed that people with a morning migraine reported poorer sleep quality the night before. They also reported lower energy the day before. Interestingly, those factors weren’t associated with an increased risk of headaches in the afternoon or evening. Instead, afternoon or evening headaches were more often preceded by higher stress or higher-than-average energy the day before.

More specifically, people with poorer average perceived sleep quality had a 22 percent greater chance of a headache attack the next morning. A drop below a person’s usual self-reported sleep quality was also associated with an 18 percent greater chance of a headache the next morning. Similarly, a drop in a person’s usual energy level on the prior day was associated with a 16 percent greater chance of a headache the next morning. In contrast, higher average stress levels and substantially higher-than-usual energy the day before were associated with a 17 percent greater chance of a headache later the next day.

Surprisingly, after accounting for energy and sleep, the study found no connection between feeling anxious or depressed and having a headache the next day. However, Merikangas emphasizes that participants’ perceived differences in energy and sleep may not reflect objective measures of sleep patterns or energy, suggesting that the connection may instead reflect, in complex ways, changes in how people feel about their underlying physical or emotional state.

The findings suggest that changes in the body and brain are already under way before a person first feels a headache, raising the possibility that migraines and other headaches could be predicted and prevented. They also add to evidence that diaries or apps can help headache sufferers track their sleep, health, behavior, and emotional states in real time to better understand and manage headache pain. Meanwhile, the researchers report that they’re continuing to explore other factors that may precede and trigger headaches, including diet, physiological changes such as stress hormone levels, and environmental factors such as weather, seasonal changes, and geography.

References:

[1] American Headache Society. The Prevalence of Migraine and Severe Headache.

[2] Lateef TM, et al. Association Between Electronic Diary-Rated Sleep, Mood, Energy, and Stress With Incident Headache in a Community-Based Sample. Neurology. DOI: 10.1212/WNL.0000000000208102. (2024).

NIH Support: National Institute of Mental Health

Findings in Tuberculosis Immunity Point Toward New Approaches to Treatment and Prevention

Posted on by Dr. Monica M. Bertagnolli

Tuberculosis, caused by the bacterium Mycobacterium tuberculosis (Mtb), took 1.3 million lives in 2022, making it the world’s second leading infectious killer after COVID-19, according to the World Health Organization. Current TB treatments require months of daily medicine, and some cases of TB are becoming increasingly difficult to treat because of drug resistance. While TB case counts had been steadily decreasing before the COVID-19 pandemic, they have ticked up in the last couple of years.

Although a TB vaccine, known as BCG, exists and offers some protection to young children, it has not effectively prevented TB in adults. Developing more protective and longer-lasting TB vaccines remains an urgent priority for NIH. As part of this effort, NIH’s Immune Mechanisms of Protection Against Mycobacterium tuberculosis Centers (IMPAc-TB) are working to learn more about how we can harness our immune systems to mount the best protection against Mtb. And I’m happy to share some encouraging results, now reported in the journal PLoS Pathogens, that show progress in understanding TB immunity and suggest additional strategies to fight this deadly bacterial infection in the future.[1]

Most vaccines work by stimulating our immune systems to produce antibodies that target a specific pathogen. This targeted response belongs to what’s called the adaptive immune system, and those antibodies help protect us from getting sick if we are ever exposed to that pathogen in the future. However, the body’s more immediate but less specific response to infection, called the innate immune system, serves as the first line of defense. The innate immune system includes cells known as macrophages, which gobble up and destroy pathogens while helping to launch inflammatory responses that help fight an infection.

In the case of TB, here’s how it works: If you were to inhale Mtb bacteria, macrophages in the lungs’ tiny air sacs, called alveoli, would be the first cells to encounter them. When these alveolar macrophages meet Mtb for the first time, they don’t mount a strong attack. In fact, Mtb can infect these macrophages and multiply inside them for a week or more.

What this intriguing new study, led by Alissa Rothchild at the University of Massachusetts Amherst with colleagues from Seattle Children’s Research Institute, suggests is that vaccines could target this innate immune response, changing the way macrophages in the lungs respond and bolstering overall defenses. How would that work? Scientists are just beginning to understand it, but it turns out that the adaptive immune system isn’t the only part of our immune system capable of adapting. The innate immune system can also undergo long-term changes, or remodeling, based on its experiences. In the new study, the researchers set out to explore the various ways alveolar macrophages could respond to Mtb.

To explore this, the study’s first author, Dat Mai at Seattle Children’s Research Institute, studied three groups of mice. The first group received the BCG vaccine. In the second group, the researchers introduced Mtb into the ears of the mice to cause a persistent but contained infection in their lymph nodes. The team had earlier shown that this contained Mtb infection affords animals some protection against subsequent Mtb infections. A third group, the controls, received no intervention. Weeks later, all three groups were exposed to aerosolized Mtb under controlled conditions. The researchers then sorted infected macrophages from the animals’ lungs for further study.

Alveolar macrophages from the first two groups of mice showed a strong inflammatory response to the subsequent Mtb exposure. However, those responses differed: Macrophages from vaccinated mice turned on one type of inflammatory program, while macrophages from mice with the contained Mtb infection turned on another. Additional analyses revealed other discernible differences in the macrophages depending on the exposure scenario, which now warrant further investigation.

The findings show that macrophages can respond quite differently to the same exposure depending on what they have encountered in the past. They complement earlier findings that BCG vaccination can also have long-term effects on other subsets of innate immune cells, including myeloid cells from bone marrow.[2,3] The researchers suggest it may be possible to take advantage of such changes to devise new strategies for preventing or treating TB by strengthening not just the adaptive immune response but the innate immune response as well.

As part of the IMPAc-TB Center led by Kevin Urdahl at Seattle Children’s Research Institute, the researchers are now working with Gerhard Walzl and Nelita du Plessis at Stellenbosch University in South Africa to compare the responses in mice to those in human alveolar macrophages collected from individuals across the spectrum of TB disease. As they and others continue to learn more about TB immunity, the hope is to apply these insights toward the development of new vaccines that could combat this disease more effectively and ultimately save lives.

References:

[1] Mai D, et al. Exposure to Mycobacterium remodels alveolar macrophages and the early innate response to Mycobacterium tuberculosis infection. PLoS Pathogens. DOI: 10.1371/journal.ppat.1011871. (2024).

[2] Kaufmann E, et al. BCG Educates Hematopoietic Stem Cells to Generate Protective Innate Immunity against Tuberculosis. Cell. DOI: 10.1016/j.cell.2017.12.031. (2018).

[3] Lange C, et al. 100 years of Mycobacterium bovis bacille Calmette-Guérin. Lancet Infectious Diseases. DOI: 10.1016/S1473-3099(21)00403-5. (2022).

NIH Support: National Institute of Allergy and Infectious Diseases

New Findings in Football Players May Aid the Future Diagnosis and Study of Chronic Traumatic Encephalopathy (CTE)

Posted on by Dr. Monica M. Bertagnolli

Repeated hits to the head, whether from boxing, playing American football, or other sources of repetitive head impacts, can increase someone’s risk of developing a serious neurodegenerative condition called chronic traumatic encephalopathy (CTE). The condition is characterized by a buildup of tau protein in the brain and causes a wide range of problems with thinking, understanding, impulse control, and more. Unfortunately, CTE can be diagnosed definitively only after death, during an autopsy of the brain, making it a challenging condition to study and treat. Recent NIH-funded research shows that, alarmingly, even young amateur players of contact and collision sports can have CTE, underscoring the urgency of finding ways to understand, diagnose, and treat it.[1]

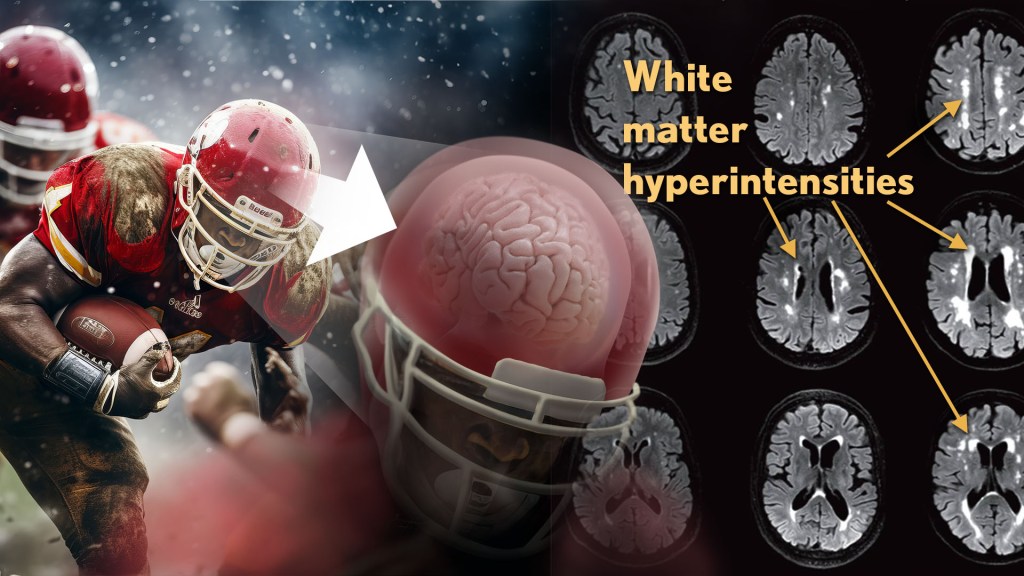

New findings published in the journal Neurology suggest that a greater burden of certain brain lesions visible on MRI scans may be related to other brain changes in former football players. The study describes a new way to capture and analyze the long-term effects of repeated head injuries, which could have implications for understanding signs of CTE.[2]

The study analyzes data from the Diagnose CTE Research Project, an NIH-supported effort to develop methods for diagnosing CTE during life and to examine other potential risk factors for the degenerative brain condition. It involves 120 former professional football players and 60 former college football players with an average age of 57. For comparison, it also includes 60 men with an average age of 59 who had no symptoms, did not play football, and had no history of head trauma or concussion.

The new findings link some of the downstream risks of repetitive head impacts to injuries in white matter, the brain’s deeper tissue. Known as white matter hyperintensities (WMH), these injuries show up on MRI scans as easy-to-see bright spots.

Earlier studies had shown that athletes exposed to repetitive head impacts had an unusually high burden of WMH on their brain scans. These markers, which also become more common with normal aging, are associated with an increased risk of stroke, cognitive decline, dementia, and death. In the new study, researchers including Michael Alosco of Boston University Chobanian & Avedisian School of Medicine wanted to learn more about WMH and their relationship to other signs of brain trouble seen in former football players.

All the study’s volunteers had brain scans and lumbar punctures to collect cerebrospinal fluid, in search of underlying signs, or biomarkers, of neurodegenerative disease and white matter changes. The former football players showed more evidence of WMH. As expected, those with a greater WMH burden were more likely to have risk factors for stroke (such as high blood pressure, high cholesterol, and diabetes), but this association was 11 times stronger in former football players than in non-football players. More WMH was also associated with higher concentrations of tau protein in cerebrospinal fluid, and this connection was twice as strong in the football players as in the non-football players. Other signs of functional breakdown in the brain’s white matter were more apparent in participants with more WMH, and this connection was nearly four times as strong in the former football players.

These latest results don’t prove that WMH from repetitive head impacts cause the other troubling brain changes seen in football players or others who go on to develop CTE. But they do highlight an intriguing association that may aid the further study and diagnosis of repetitive head impacts and CTE, with potentially important implications for understanding—and perhaps ultimately averting—their long-term consequences for brain health.

References:

[1] McKee AC, et al. Neuropathologic and Clinical Findings in Young Contact Sport Athletes Exposed to Repetitive Head Impacts. JAMA Neurology. DOI: 10.1001/jamaneurol.2023.2907. (2023).

[2] Ly MT, et al. Association of Vascular Risk Factors and CSF and Imaging Biomarkers With White Matter Hyperintensities in Former American Football Players. Neurology. DOI: 10.1212/WNL.0000000000208030. (2024).

NIH Support: National Institute of Neurological Disorders and Stroke, National Institute on Aging and the National Center for Advancing Translational Sciences
